Introduction

In today’s fast-paced digital landscape, businesses are constantly looking for ways to streamline their operations, improve scalability, and keep their applications resilient. Amazon Elastic Kubernetes Service (Amazon EKS) addresses this with a managed container service that lets you run Kubernetes on Amazon Web Services (AWS) without installing and operating your own Kubernetes control plane.

AWS EKS

AWS EKS is a managed service that makes it easier to run Kubernetes on AWS. Kubernetes, an open-source system for automating the deployment, scaling, and management of containerized applications, is at the heart of the modern development ecosystem. EKS removes the work of building a Kubernetes cluster from scratch and lets your Kubernetes workloads integrate directly with other AWS services and infrastructure.

Features and Benefits

- Fully Managed Kubernetes Control Plane: AWS EKS takes away the operational burden of managing the Kubernetes control plane, which includes the API server and the etcd database. AWS handles its setup, scaling, and availability, so you can focus on your applications.

- Integration with AWS Services: EKS integrates with AWS services such as Elastic Load Balancing (ELB), Amazon VPC, and IAM, giving your containerized applications a secure and scalable environment to run in.

- Scalability and Reliability: EKS runs the Kubernetes control plane across multiple Availability Zones (AZs) for high availability and scales it automatically. Managed node groups are backed by Amazon EC2 Auto Scaling groups, which keep the number of worker nodes within the minimum and maximum you configure. Adding a node autoscaler such as Cluster Autoscaler lets that number follow workload demand.

- Security: Security in EKS is multilayered. It uses IAM for authentication, VPC for network isolation, and encryption options for data at rest and in transit to help keep your clusters and applications secure.

- Hybrid Cloud Capabilities: With Amazon EKS Anywhere and Amazon EKS on AWS Outposts, you can also run EKS-based Kubernetes clusters on-premises. This gives you a consistent platform for managing applications regardless of where they are deployed.

Why EKS?

AWS EKS stands out for its robustness, scalability, and integration with the rest of AWS. Compared with self-managed Kubernetes setups, EKS reduces operational complexity and overhead, which makes it attractive to enterprises and individual developers alike. Its highly available architecture builds on AWS’s proven infrastructure and services, which helps keep your applications resilient and performant.

Getting Started with AWS EKS (with Terraform)

Getting started with EKS is straightforward. You can create an EKS cluster using the AWS Management Console, the AWS CLI, or the AWS SDKs. Once your cluster is up, you can configure your worker nodes and deploy your applications with Kubernetes tools such as kubectl. DevOps work is highly repetitive, so I used Terraform (and Terraform modules) to walk through the steps for creating a demo EKS cluster that runs pods with a vanilla NGINX configuration.
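
The walkthrough below uses the AWS, Kubernetes, and Helm providers, but their setup is not shown. The following is a minimal sketch of a root-level versions/provider file. The version constraints are my assumptions, not the author's. Helm is held at 2.x because the helm_release later on uses `set { }` blocks. `var.main-region` and `var.profile` are the same variables the main block already passes to the modules.

```hcl
# Sketch only: provider version constraints are assumptions
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "~> 2.0"
    }
    # Helm provider 2.x still accepts the `set { }` block syntax used by the helm_release
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.0"
    }
  }
}

# Region and named CLI profile come from the root variables used in the main block
provider "aws" {
  region  = var.main-region
  profile = var.profile
}
```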

Modules

VPC

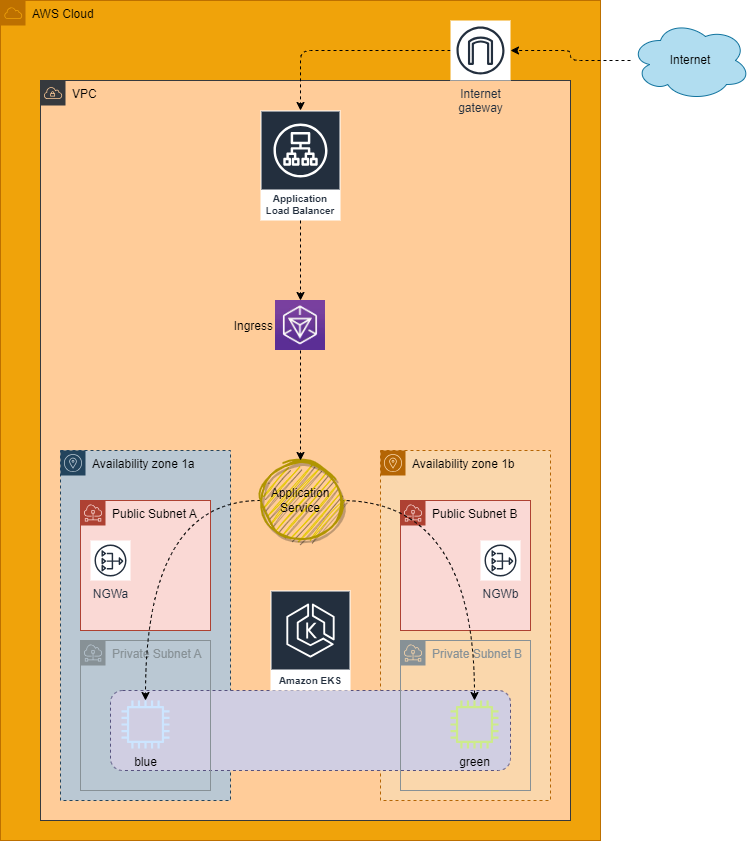

- Here I create the network environment the EKS cluster will run in

- I use only two Availability Zones (AZs), each hosting one public and one private subnet

- I spin up a NAT gateway (NGW) in each AZ

- Notice the tags on the subnets. The AWS Load Balancer Controller uses them later to discover where to place load balancers: public subnets for internet-facing load balancers, private subnets for internal ones

- I also output the VPC ID and the private subnet IDs for use later
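
The module call below hardcodes the four subnet CIDRs. Terraform's built-in `cidrsubnet` function can derive the same /24 blocks from the VPC CIDR. The following is a minimal alternative sketch; the local names are hypothetical, and the module call would reference them (for example `private_subnets = local.private_subnets`) instead of the literal lists.

```hcl
# Hypothetical locals that reproduce the hardcoded subnet layout
locals {
  vpc_cidr = "10.0.0.0/16"
  azs      = ["us-east-1a", "us-east-1b"]

  # cidrsubnet(prefix, newbits, netnum): 8 extra bits on a /16 gives /24 blocks.
  # netnum 1 and 2 yield 10.0.1.0/24 and 10.0.2.0/24
  private_subnets = [for i in range(length(local.azs)) : cidrsubnet(local.vpc_cidr, 8, i + 1)]

  # netnum 101 and 102 yield 10.0.101.0/24 and 10.0.102.0/24
  public_subnets = [for i in range(length(local.azs)) : cidrsubnet(local.vpc_cidr, 8, i + 101)]
}
```

Adding a third AZ to `local.azs` would then extend both subnet lists without editing any CIDRs by hand.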

module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "5.5.3"

  name = "eks-vpc"
  cidr = "10.0.0.0/16"

  azs             = ["us-east-1a", "us-east-1b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]

  # One NAT gateway per AZ, each placed in that AZ's public subnet
  enable_nat_gateway     = true
  one_nat_gateway_per_az = true

  # Subnet discovery tags read by the AWS Load Balancer Controller
  public_subnet_tags = {
    "kubernetes.io/role/elb" = 1
  }

  private_subnet_tags = {
    "kubernetes.io/role/internal-elb" = 1
  }

  tags = {
    Environment = "dev"
  }
}

output "vpc_id" {
  description = "The ID of the VPC"
  value       = module.vpc.vpc_id
}

output "private_subnets" {
  description = "List of IDs of private subnets"
  value       = module.vpc.private_subnets
}

EKS Cluster

- Here I create the EKS cluster with two managed node groups, blue and green. For each group I set the minimum and maximum number of EC2 instances, along with the desired number to run at any one time

- The nodes use specific instance types (t2.medium by default, t3.medium for the green group) and run as on-demand capacity

- I output the cluster name, the ARN of the OIDC provider, the cluster endpoint, and the certificate authority data needed to talk to the cluster (all of which are needed to create the application later)
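
These outputs are what the Kubernetes and Helm providers need to reach the cluster. The following is a minimal sketch of the root-level provider configuration. It assumes that the cluster module is called as `module.eks` (as in the main block) and that Helm provider 2.x is used. It also assumes the AWS CLI is installed where Terraform runs, so that `aws eks get-token` can issue short-lived tokens. If you rely on a named profile, you would also append `"--profile"` and its name to `args`.

```hcl
provider "kubernetes" {
  host                   = module.eks.cluster_endpoint
  cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

  # Fetch a short-lived cluster token with the AWS CLI on each Terraform run
  exec {
    api_version = "client.authentication.k8s.io/v1beta1"
    command     = "aws"
    args        = ["eks", "get-token", "--cluster-name", module.eks.cluster_name, "--region", var.main-region]
  }
}

# Helm provider 2.x syntax: connection settings go in a nested kubernetes block
provider "helm" {
  kubernetes {
    host                   = module.eks.cluster_endpoint
    cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

    exec {
      api_version = "client.authentication.k8s.io/v1beta1"
      command     = "aws"
      args        = ["eks", "get-token", "--cluster-name", module.eks.cluster_name, "--region", var.main-region]
    }
  }
}
```

Unaliased provider configurations in the root are inherited by child modules, so the ALB controller module can use them without extra wiring.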

module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "19.21.0"

  cluster_version = "1.29"
  cluster_name    = "eks-cluster"

  cluster_endpoint_public_access = true

  create_kms_key              = false
  create_cloudwatch_log_group = false
  cluster_encryption_config   = {}

  cluster_addons = {
    coredns = {
      most_recent = true
    }
    kube-proxy = {
      most_recent = true
    }
    vpc-cni = {
      most_recent = true
    }
    aws-ebs-csi-driver = {
      most_recent = true
    }
  }

  vpc_id                   = var.vpc_id
  subnet_ids               = var.private_subnets
  control_plane_subnet_ids = var.private_subnets

  # Managed Node Group
  eks_managed_node_group_defaults = {
    instance_types = ["t2.medium"]

    iam_role_additional_policies = {
      AmazonEBSCSIDriverPolicy = "arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy"
    }
  }

  eks_managed_node_groups = {
    blue = {
      min_size     = 1
      max_size     = 2
      desired_size = 1
    }

    green = {
      min_size     = 1
      max_size     = 2
      desired_size = 1

      instance_types = ["t3.medium"]
      capacity_type  = "ON_DEMAND"
    }
  }

  tags = {
    env = "dev"
  }
}

output "cluster_name" {
  description = "The name of the EKS cluster"
  value       = module.eks.cluster_name
}

output "oidc_provider_arn" {
  description = "The ARN of the OIDC Provider if `enable_irsa = true`"
  value       = module.eks.oidc_provider_arn
}

output "cluster_endpoint" {
  description = "Endpoint for your Kubernetes API server"
  value       = module.eks.cluster_endpoint
}

output "cluster_certificate_authority_data" {
  description = "Base64 encoded certificate data required to communicate with the cluster"
  value       = module.eks.cluster_certificate_authority_data
}

ALB Controller

- Here I create an IAM role with the load balancer controller policy attached, along with a Kubernetes service account for the AWS Load Balancer Controller

- Once those are in place, I install the controller with Helm and tie it to the IAM role through the service account's role-arn annotation
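
This module receives five inputs from the main block: `main-region`, `env_name`, `cluster_name`, `vpc_id`, and `oidc_provider_arn`. It therefore needs matching variable declarations, which the original does not show. The following is a minimal sketch of `modules/2-aws-alb-controller/variables.tf`; the file name and descriptions are my assumptions.

```hcl
variable "main-region" {
  description = "AWS region, used for the chart's region value and the ECR image URL"
  type        = string
}

variable "env_name" {
  description = "Prefix for the controller's IAM role name"
  type        = string
}

variable "cluster_name" {
  description = "Name of the EKS cluster the controller manages"
  type        = string
}

variable "vpc_id" {
  description = "ID of the VPC the cluster runs in"
  type        = string
}

variable "oidc_provider_arn" {
  description = "ARN of the cluster's IAM OIDC provider, used for IAM roles for service accounts"
  type        = string
}
```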

module "lb_role" {
  source = "terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks"

  role_name                              = "${var.env_name}_eks_lb"
  attach_load_balancer_controller_policy = true

  oidc_providers = {
    main = {
      provider_arn               = var.oidc_provider_arn
      namespace_service_accounts = ["kube-system:aws-load-balancer-controller"]
    }
  }
}

# Load Balancer Controller Service Account
resource "kubernetes_service_account" "service-account" {
  metadata {
    name      = "aws-load-balancer-controller"
    namespace = "kube-system"
    labels = {
      "app.kubernetes.io/name"      = "aws-load-balancer-controller"
      "app.kubernetes.io/component" = "controller"
    }
    annotations = {
      "eks.amazonaws.com/role-arn"               = module.lb_role.iam_role_arn
      "eks.amazonaws.com/sts-regional-endpoints" = "true"
    }
  }
}

# Install Load Balancer Controller via Helm
resource "helm_release" "lb" {
  name       = "aws-load-balancer-controller"
  repository = "https://aws.github.io/eks-charts"
  chart      = "aws-load-balancer-controller"
  namespace  = "kube-system"

  depends_on = [
    kubernetes_service_account.service-account
  ]

  set {
    name  = "region"
    value = var.main-region
  }

  set {
    name  = "vpcId"
    value = var.vpc_id
  }

  set {
    name  = "image.repository"
    value = "602401143452.dkr.ecr.${var.main-region}.amazonaws.com/amazon/aws-load-balancer-controller"
  }

  # Reuse the service account created above instead of letting the chart create one
  set {
    name  = "serviceAccount.create"
    value = "false"
  }

  set {
    name  = "serviceAccount.name"
    value = "aws-load-balancer-controller"
  }

  set {
    name  = "clusterName"
    value = var.cluster_name
  }
}

Demo Application (vanilla NGINX container/pod)

- To keep it simple, I define the application's resources (and the IAM modules they use) directly in the root configuration; you could instead package them as another module alongside the ones above

- First, I create a Kubernetes namespace to deploy the application into

- Next, for security, I create an IAM policy and a role with that policy attached, for the application to use

- Then I create a service account that the application uses to access AWS services through that role

- With the stage set, I deploy the application with NGINX as the container

- Once the application is deployed, I create a service to expose the deployment

- Finally, I create an ingress, which the AWS Load Balancer Controller turns into an Application Load Balancer
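
The application policy below is passed to the IAM module as a JSON heredoc. An alternative builds the same document with the AWS provider's `aws_iam_policy_document` data source. Terraform then checks the statement as HCL and renders the JSON. The following is a minimal sketch: the data source name `demo_application` is hypothetical, and the module block shown would replace the heredoc version rather than sit beside it.

```hcl
# Same permissions as the heredoc policy: read-only EC2 Describe* calls
data "aws_iam_policy_document" "demo_application" {
  statement {
    effect    = "Allow"
    actions   = ["ec2:Describe*"]
    resources = ["*"]
  }
}

module "demo_application_iam_policy" {
  source = "terraform-aws-modules/iam/aws//modules/iam-policy"

  name        = "${var.env_name}_demo_application_policy"
  path        = "/"
  description = "demo Application Policy"

  # The data source renders the statement as a JSON policy string (Version defaults to 2012-10-17)
  policy = data.aws_iam_policy_document.demo_application.json
}
```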

# Demo Application Namespace
resource "kubernetes_namespace" "demo-application-namespace" {
  metadata {
    annotations = {
      name = "demo-application"
    }
    labels = {
      application = "demo-nginx-application"
    }
    name = "demo-application"
  }
}

# Demo Application Policy for Role
module "demo_application_iam_policy" {
  source = "terraform-aws-modules/iam/aws//modules/iam-policy"

  name        = "${var.env_name}_demo_application_policy"
  path        = "/"
  description = "demo Application Policy"

  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "ec2:Describe*"
      ],
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}
EOF
}

# Demo Application Role
module "demo_application_role" {
  source = "terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks"

  role_name = "${var.env_name}_demo_application"

  role_policy_arns = {
    policy = module.demo_application_iam_policy.arn
  }

  oidc_providers = {
    main = {
      #provider_arn = var.oidc_provider_arn
      provider_arn               = module.eks.oidc_provider_arn
      namespace_service_accounts = ["demo-application:demo-application-sa"]
    }
  }
}

# Demo Application Service Account
resource "kubernetes_service_account" "service-account" {
  metadata {
    name      = "demo-application-sa"
    namespace = kubernetes_namespace.demo-application-namespace.metadata[0].name
    labels = {
      "app.kubernetes.io/name" = "demo-application-sa"
    }
    annotations = {
      "eks.amazonaws.com/role-arn"               = module.demo_application_role.iam_role_arn
      "eks.amazonaws.com/sts-regional-endpoints" = "true"
    }
  }
}

# Demo Application Deployment
resource "kubernetes_deployment_v1" "demo_application_deployment" {
  metadata {
    name      = "demo-application-deployment"
    namespace = kubernetes_namespace.demo-application-namespace.metadata[0].name
    labels = {
      app = "nginx"
    }
  }

  spec {
    replicas = 2

    selector {
      match_labels = {
        app = "nginx"
      }
    }

    template {
      metadata {
        labels = {
          app = "nginx"
        }
      }

      spec {
        service_account_name = kubernetes_service_account.service-account.metadata[0].name

        container {
          #image = "httpd:latest"
          #name  = "apache"
          image = "nginx:latest"
          name  = "nginx"

          resources {
            limits = {
              cpu    = "0.5"
              memory = "512Mi"
            }
            requests = {
              cpu    = "250m"
              memory = "50Mi"
            }
          }

          liveness_probe {
            http_get {
              path = "/"
              port = 80

              http_header {
                name  = "X-Custom-Header"
                value = "Awesome"
              }
            }

            initial_delay_seconds = 3
            period_seconds        = 3
          }
        }
      }
    }
  }
}

# Demo Application Service
resource "kubernetes_service_v1" "demo_application_svc" {
  metadata {
    name      = "demo-application-svc"
    namespace = kubernetes_namespace.demo-application-namespace.metadata[0].name
  }

  spec {
    selector = {
      app = "nginx"
    }
    session_affinity = "ClientIP"

    port {
      port        = 80
      target_port = 80
    }

    # NodePort, because the ALB's default "instance" target type forwards traffic to node ports
    type = "NodePort"
  }
}

# Demo Application Ingress
resource "kubernetes_ingress_v1" "demo_application_ingress" {
  metadata {
    name      = "demo-application-ingress"
    namespace = kubernetes_namespace.demo-application-namespace.metadata[0].name
    annotations = {
      "alb.ingress.kubernetes.io/scheme" = "internet-facing"
    }
  }

  # Wait until the controller has provisioned the ALB so its hostname can be output
  wait_for_load_balancer = true

  spec {
    ingress_class_name = "alb"

    default_backend {
      service {
        name = "demo-application-svc"
        port {
          number = 80
        }
      }
    }

    rule {
      http {
        path {
          backend {
            service {
              name = "demo-application-svc"
              port {
                number = 80
              }
            }
          }
          path = "/app1/*"
        }
      }
    }

    # An ALB terminates TLS with AWS Certificate Manager (ACM) certificates;
    # the AWS Load Balancer Controller does not read certificates from Kubernetes secrets
    tls {
      secret_name = "tls-secret"
    }
  }
}

Main Block

- This block calls all the modules above and outputs the DNS name of the Application Load Balancer, which you can copy and paste into a web browser for testing.
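
The main block reads `var.main-region`, `var.profile`, `var.env_name`, and `var.cluster_name`, so the root module must declare them. The following is a minimal `variables.tf` sketch. The defaults are my assumptions for this demo: us-east-1 matches the AZs hardcoded in the VPC module, and eks-cluster matches the name set in the EKS module.

```hcl
variable "main-region" {
  description = "AWS region to deploy into"
  type        = string
  default     = "us-east-1" # assumption: matches the AZs hardcoded in the VPC module
}

variable "profile" {
  description = "Named AWS CLI profile used for credentials"
  type        = string
}

variable "env_name" {
  description = "Environment prefix for IAM role and policy names"
  type        = string
  default     = "dev" # assumption
}

variable "cluster_name" {
  description = "EKS cluster name; must match the name set in the EKS module"
  type        = string
  default     = "eks-cluster"
}
```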

# VPC Module
module "vpc" {
  source      = "./modules/0-vpc"
  main-region = var.main-region
  profile     = var.profile
}

# EKS Cluster Module
module "eks" {
  source          = "./modules/1-eks-cluster"
  main-region     = var.main-region
  profile         = var.profile
  vpc_id          = module.vpc.vpc_id
  private_subnets = module.vpc.private_subnets
}

# AWS ALB Controller
module "aws_alb_controller" {
  source      = "./modules/2-aws-alb-controller"
  main-region = var.main-region
  env_name    = var.env_name
  # Taking the name from the EKS module output keeps it in sync with the actual cluster
  cluster_name      = module.eks.cluster_name
  vpc_id            = module.vpc.vpc_id
  oidc_provider_arn = module.eks.oidc_provider_arn
}

output "load_balancer_dns_name" {
  value = kubernetes_ingress_v1.demo_application_ingress.status[0].load_balancer[0].ingress[0].hostname
}
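
To inspect the pods with kubectl, you first need a kubeconfig entry for the new cluster. A hypothetical extra output can print the AWS CLI command that creates it. The name `configure_kubectl` is my own and not part of the original setup, and the sketch assumes the EKS module exposes `cluster_name` as shown earlier.

```hcl
# Hypothetical helper output: the command that adds this cluster to ~/.kube/config
output "configure_kubectl" {
  description = "Run this command to point kubectl at the new cluster"
  value       = "aws eks update-kubeconfig --region ${var.main-region} --name ${module.eks.cluster_name}"
}
```

After `terraform apply`, running `terraform output -raw configure_kubectl` prints the command, ready to paste into a shell.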

Conclusion

AWS EKS simplifies deploying and managing containerized applications at scale. By combining Kubernetes with the breadth of AWS services, EKS provides a robust, scalable, and secure platform for modern application development. Whether you are an enterprise looking to modernize your applications or a developer eager to deploy scalable ones, AWS EKS is a compelling option, and defining it in Terraform makes the whole setup repeatable.